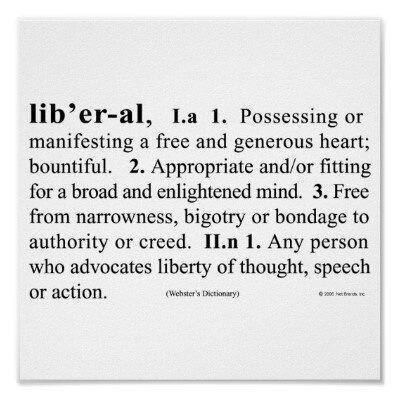

I came across this the other day:

I had seen it before, but this time I decided to fact-check it. As it turns out, both Webster’s New World Dictionary and the Oxford English Dictionary not only corroborate this definition but go much further.

This made me wonder how on Earth this word has been made to mean something bad to so many people. The way some pundits and talking heads spit out the word “liberal” as though it’s a piece of rancid cheese, you’d think being liberal is downright un-American. And yet, the exact opposite is true. This country was founded on the most liberal principles known to humanity at the time. In fact, they were downright revolutionary! (No pun intended.) Imagine! Government by the people instead of a king! It was practically heresy.

And even now those people who most vilify the word “liberal” nonetheless continually espouse liberal principles — such as tolerance of others, favoring individual freedom, broad-mindedness, and democracy — even as they demonstrate exactly the opposite in their words and actions. Yes, I’m talking about you, Faux News.

The word “liberal” literally defines the United States of America, both in its founding and in its often-stated principles. So how did this fine little word come to mean something so foul to so many ill-informed people?
